How to set up an EKS cluster with Terraform

Part 2 of the ArgoCD mega tutorial

Introduction

In this post I will document how to set up a Kubernetes cluster using the AWS service "EKS" in conjunction with Terraform as Infrastructure as Code.

You should already know what Terraform and Kubernetes are; this is a simple guide to bringing them together.

Since spinning up a Kubernetes cluster is not a "small" task, we are going to use the official Terraform module, which already includes all the resources you will need (cluster, node groups, VPC, subnets, security groups, etc.).

Prerequisites:

| Prerequisite | External tutorial for this step |
| --- | --- |
| Have an AWS account | https://signin.aws.amazon.com/signin?redirect_uri=https%3A%2F%2Fportal.aws.amazon.com%2Fbilling%2Fsignup%2Fresume&client_id=signup&code_challenge_method=SHA-256&code_challenge=3qw6itVtqcMg9SrtN2LkXAecc_9JCtHLJwQb9UBFMOY#/start |
| Set up a Terraform backend in S3 or Terraform Cloud, as you prefer | https://github.com/danibyay/aws_terraform_starter_code |
| Install kubectl | https://kubernetes.io/docs/tasks/tools/ |
| Generate IAM credentials and save them in your local .aws/credentials file | https://docs.aws.amazon.com/IAM/latest/UserGuide/id_credentials_access-keys.html |
| Install the AWS CLI | https://docs.aws.amazon.com/cli/latest/userguide/getting-started-install.html |

Get the starter code and customize it

Clone or fork this repository:

https://github.com/hashicorp/learn-terraform-provision-eks-cluster

I will modify the terraform.tf file to use my own backend.

Before

    terraform {
      cloud {
        workspaces {
          name = "learn-terraform-eks"
        }
      }

      required_providers {
        # ...

After

    terraform {
      backend "s3" {
        bucket         = "danibish-bucket"
        key            = "eks-cluster-demo/terraform.tfstate"
        region         = "us-east-1"
        dynamodb_table = "dynamodb-state-locking"
      }

      # ...

Replace the values in the backend block with your own.

I also moved the provider block from main.tf into terraform.tf so that main.tf only contains resources. You will see why later on.

    provider "aws" {
      region = var.region
    }

Lastly, I deleted the last three blocks of main.tf (line 93 onwards), because this project doesn't need anything related to the EBS CSI driver add-on.

Delete this from main.tf:

    # https://aws.amazon.com/blogs/containers/amazon-ebs-csi-driver-is-now-generally-available-in-amazon-eks-add-ons/
    data "aws_iam_policy" "ebs_csi_policy" {
      arn = "arn:aws:iam::aws:policy/service-role/AmazonEBSCSIDriverPolicy"
    }

    module "irsa-ebs-csi" {
      source  = "terraform-aws-modules/iam/aws//modules/iam-assumable-role-with-oidc"
      version = "4.7.0"

      create_role                   = true
      role_name                     = "AmazonEKSTFEBSCSIRole-${module.eks.cluster_name}"
      provider_url                  = module.eks.oidc_provider
      role_policy_arns              = [data.aws_iam_policy.ebs_csi_policy.arn]
      oidc_fully_qualified_subjects = ["system:serviceaccount:kube-system:ebs-csi-controller-sa"]
    }

    resource "aws_eks_addon" "ebs-csi" {
      cluster_name             = module.eks.cluster_name
      addon_name               = "aws-ebs-csi-driver"
      addon_version            = "v1.20.0-eksbuild.1"
      service_account_role_arn = module.irsa-ebs-csi.iam_role_arn
      tags = {
        "eks_addon" = "ebs-csi"
        "terraform" = "true"
      }
    }

Run terraform steps locally

At this point, you can apply your configuration.

Let's run:

    terraform init

This downloads the required provider code and initializes your backend.

    terraform apply

Check the plan output before confirming the apply. Deploying all the necessary resources can take around 10 minutes.
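If you prefer to review the plan as its own step, one option is to save it to a file and then apply exactly that file. The sketch below wraps the two commands in small shell functions; the function names and the default file name `tfplan` are my own choices for illustration and are not part of the starter code.

```shell
# Hypothetical helpers (names are illustrative, not from the starter code).

# Step 1: write the plan to a file so it can be reviewed before anything changes.
# The file name defaults to "tfplan".
review_plan() {
  terraform plan -out="${1:-tfplan}"
}

# Step 2: apply exactly the plan that was saved in step 1.
# Applying a saved plan file does not prompt for confirmation again.
apply_plan() {
  terraform apply "${1:-tfplan}"
}
```

Run `review_plan`, read the output, and call `apply_plan` only when you are happy with what it shows.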

Validate the backend in S3

Now we can also verify that the remote state file is in our bucket.
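One way to check this from the terminal instead of the console is to ask S3 for the object's metadata. This is a minimal sketch: the function name `state_exists` is hypothetical, and you would pass your own bucket and key (the ones from your backend block).

```shell
# Hypothetical helper: succeeds only if the state object exists in the bucket.
# Usage: state_exists <bucket> <key>
state_exists() {
  # head-object fetches only metadata and fails if the object is missing
  # or your credentials cannot access it.
  aws s3api head-object --bucket "$1" --key "$2" >/dev/null 2>&1
}
```

With the backend values used in this post, that would look like `state_exists danibish-bucket eks-cluster-demo/terraform.tfstate && echo "state file found"`.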

Configure your kubectl to connect to the newly created cluster

If you inspected the code, you will have seen that the cluster name gets a random suffix. To find the final name of your cluster, check the AWS console or read the Terraform outputs printed at the end of the apply. Either way, you will need the name for the next command.

Run the following AWS CLI command to configure your kubectl client to control your new cluster, replacing region-code and my-cluster with your own values:

    aws eks update-kubeconfig --region region-code --name my-cluster
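Rather than copying the name by hand, you can read both values from the Terraform outputs. The sketch below assumes the configuration exposes outputs named `region` and `cluster_name`; check your outputs.tf and adjust the names if yours differ. The function name `connect_kubectl` is hypothetical.

```shell
# Hypothetical helper: point kubectl at the cluster Terraform just created.
# Assumes outputs called "region" and "cluster_name" exist (see outputs.tf).
connect_kubectl() {
  # -raw prints the bare value without quotes, ready to use as an argument.
  region="$(terraform output -raw region)" || return 1
  cluster="$(terraform output -raw cluster_name)" || return 1
  aws eks update-kubeconfig --region "$region" --name "$cluster"
}
```

Run `connect_kubectl` from the directory that holds your Terraform configuration, since that is where the outputs are read from.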

Test your cluster with basic kubectl commands

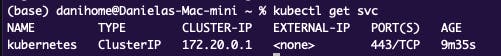

With an "empty" cluster, you will have no pods running in the default namespace, but you can check the nodes and the services.

In this deployment you should have three nodes.

    kubectl get nodes
    kubectl get svc
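If you want to check the node count in a script rather than by eye, something like the following could work. It is only a sketch: the function name `ready_node_count` is hypothetical, and it relies on the STATUS column being the second column of the `kubectl get nodes` output.

```shell
# Hypothetical helper: print how many nodes report a STATUS of exactly "Ready".
ready_node_count() {
  # --no-headers drops the column titles; awk counts rows whose 2nd column is "Ready".
  kubectl get nodes --no-headers | awk '$2 == "Ready" { n++ } END { print n + 0 }'
}
```

For this deployment you could then run `[ "$(ready_node_count)" -eq 3 ] && echo "all three nodes are Ready"`.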

Troubleshooting

If the AWS CLI tells you that the cluster name was not found, the default region of your profile in .aws/config is probably different from the region the cluster was deployed in.

To fix it, either change the default region in your config file or pass --region us-east-2 to the AWS CLI command (the starter code deploys the cluster in us-east-2):

    aws eks update-kubeconfig --region us-east-2 --name my-cluster
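To confirm that a region mismatch really is the cause, you can compare the default region of your profile with the region of the cluster. A minimal sketch, with a hypothetical function name `check_region`:

```shell
# Hypothetical helper: compare the CLI's default region with the cluster's region.
# Usage: check_region <cluster-region>
check_region() {
  # "aws configure get region" prints nothing and fails when no default is set.
  default_region="$(aws configure get region || true)"
  if [ "$default_region" = "$1" ]; then
    echo "Default region matches: $1"
  else
    echo "Default region is '${default_region:-unset}', cluster is in '$1': pass --region $1"
  fi
}
```

For the starter code, call it as `check_region us-east-2`.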

Conclusion

You have successfully created an EKS cluster with Terraform in your own AWS account, and you can now create components such as replica sets, deployments, config maps, and many more from your terminal. When you are done, run terraform destroy so that you don't keep paying for resources you no longer need.